Sometimes we fail to appreciate just how much business models in the connectivity and computing businesses are related to, driven by or enabled by the ways we implement technology. The best example is the development of “over the top” or “at the edge” business models.

When the global connectivity industry decided to adopt TCP/IP as its next generation network, it also embraced a layered approach to computing and network functions, where the functions of each layer are encapsulated. That means internal functions within a layer can be changed without requiring changes in all the other layers and functions.

That has led to the reality that application layer functions are separated from the details of network transport or computing. In other words, Meta and Google do not need the permission of any internet service provider to connect their users and customers.

Perhaps we might eventually come to see that the development of remote, cloud-based computing leads to similar connectivity business model changes. Practical examples already abound. E-commerce has disintermediated distributors, allowing buyers and sellers to conduct transactions directly.

Eventually, we might come to see owners of digital infra as buyers and sellers in a cloud ecosystem enabled by marketplaces that displace older retail formats.

Where one telco or one data center might have sold to customers within a specific geographic area, we might see more transactions between customers and sellers mediated by a retail platform, and not conducted directly between an infra supplier (computing cycles, storage, connectivity) and its customers or users.

In any number of retail areas, this already happens routinely. People can buy lodging, vehicle rentals, other forms of transportation, clothing, professional services, groceries and other consumer necessities or discretionary items using online marketplaces that aggregate buyers and sellers.

So one wonders whether this also could be replicated in the connectivity or computing businesses at a wider level. And as with IP and layers, such developments would be linked to or enabled by changes in technology.

Forty years ago, if asked to describe a mass market communications network, one would likely have talked about a network of class 4 voice switches at the core of the network, with class 5 switches for local distribution to customers. So the network was hierarchical.

That structure also was closed: by law, ownership of such assets was restricted to a single authorized provider in any geography. Also, all apps and devices used on such networks had to be approved by the sole operator in each area.

source: Revesoft

The point is that there was a correspondence between technology form and business possibility.

If asked about data networks, which would have been X.25 at that time, one would have described a series of core network switches (packet switching exchanges) that offload to local data circuit terminating equipment (functionally equivalent to a router). Think of the PSE as the functional equivalent of the class 4 switches.

source: Revesoft

Again, there was a correspondence between technology and business models. Data networks could not yet be created “at the edge of the network” by enterprises themselves, as ownership of the core network switches was necessary. Frame relay, which succeeded X.25, essentially followed the same model, as did ATM.

The adoption of IP changed all that. In the IP era, the network itself could be owned and operated by an enterprise at the edges, without the need to buy a turned-up service from a connectivity provider. That is a radically different model.

At a device level, one might say the backbone network now is a mesh network of routers that take the place of the former class 4 switches, with flatter rather than hierarchical relationships between the backbone network elements.

source: Knoldus

Internet access is required, but aside from that, entities can build wide area networks completely from the edges of the network. That of course affects WAN service provider revenues.

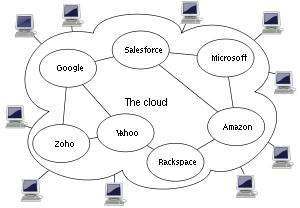

These days, we tend to abstract almost everything: servers, switches, routers, optical transport and all the network command and control, applications software and data centers. So the issue is whether that degree of abstraction, plus relative ease of online marketing, sales, ordering, fulfillment and settlement, creates some new business model opportunities.

source: SiteValley

As has been the case for other industries and products, online sales that disintermediate distributors would seem to be an obvious new business model opportunity. To be sure, digital infra firms have always used bilateral wholesale deals, sales agents and other distributors.

What they have not generally done is contribute inventory to third-party platforms as a major sales channel. Logically, that should change.