Among the obvious changes in connectivity provider business models over the past 30 years is the diminished role of variable revenue, compared to fixed components. The companion change is a switch from usage-based charging to flat-rate pricing, independent of usage.

Both changes have huge consequences. The biggest is that growth in usage no longer can be matched by comparable revenue upside. In other words, higher usage of network resources does not automatically result in higher revenue, as once was the case.

And that is why provisioned data capacity of networks keeps growing, even if revenue per account remains relatively flat. That also is why network capital investment has begun to creep up.

So consider what happens when markets saturate: when every household and person already buys internet access, mobility service and mobile broadband. When every consumer who wants landline voice and linear video already buys it, where will growth come from?

The strategic answer has to be “new services and products.” Tactically, it might not matter whether revenue from such services is variable (consumption based) or fixed (a flat-rate charge to use). Eventually, it will matter whether usage can be matched to variable charges for usage.

Consider that most cloud computing services (infrastructure, software or platform as a service) feature variable charges based on usage, even if some features are flat rated. For the most part, data center revenue models are driven by usage-based, variable charges.

Connectivity providers have no such luxury.

There always has been a mix. In the voice era, fixed charges for “lines and features” sat alongside variable charges for long distance usage. In the internet era, the balance has shifted.

Consider what has happened to long distance voice (mostly variable), mobile service (partly variable, partly fixed), and internet access or video (largely flat rated).

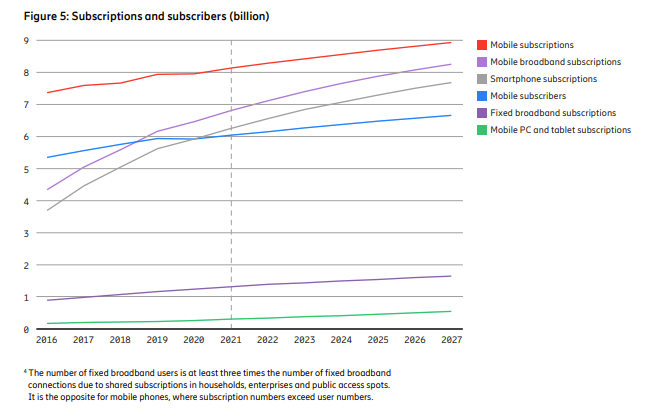

Globally, mobile subscriptions, largely a “fixed” revenue stream with flat-rate recurring charges, are a key driver of retail revenue growth. And though mobile internet access is mostly a flat-rate service (X gigabytes for a flat fee), its uptake is variable in markets where most consumers do not routinely use it.

source: Ericsson

And make no mistake, mobile subscriptions, followed by uptake of mobile broadband, drive retail revenue in the global communications market. Fixed network broadband, the key service now provided by any fixed network, lags far behind.

As early as 2007, in the U.S. market, long distance voice, which once drove half of total revenue and most of the profit, had begun its decline, with mobility subscriptions rising to replace that revenue source.

source: FCC

At a high level, that is mostly a replacement of variable revenue, based on usage, with fixed revenue, insensitive to usage.

As a practical matter, internet access providers cannot price application usage the way they once charged for international voice minutes of use. For starters, “distance” no longer matters, and distance was the rationale for differentiated pricing.

Network neutrality rules in many markets prohibit differential pricing based on quality of service, so pricing based on the quality of connections or sessions is not possible, either. Those same rules likely also make any sort of sponsored access illegal, such as when an app provider might subsidize the cost of internet access used to reach its own services.

Off-peak pricing is conceivable, but the charging mechanisms are probably not available.

It likely also is the case that the cost of metering is higher than the incremental revenue lift that might be possible, even if consumers would tolerate it.

The competitive situation likely precludes any single ISP from moving to any such differential charging mechanisms, as well.

In other words, the cost of supporting third-party or owned services, while quite differentiated in terms of the network capacity required, cannot actually be matched by revenue mechanisms that vary based on anything other than the total amount of data consumed.

Equally important, most ISPs do not own any of the actual apps used by their access customers, so there is no ability to participate in recurring revenues for app subscriptions, advertising or commerce revenues.

All of that is part of the drive to raise revenues by having governments allow taxation of a few hyperscale app providers that drive the majority of data consumption, with the proceeds being given to ISPs to fund infrastructure upgrades.

Consider for a moment the different revenue-per-bit profiles of messaging, voice, web browsing, social media, and streaming music or video subscriptions. Text messaging historically has had the highest revenue per bit, followed by voice services.

Subscription video always has had low revenue per bit, in large part because, as a media type, it requires so much bandwidth, while revenue is capped by consumer willingness to pay. Assume the average TV viewer has the screen turned on for five hours a day.

That works out to 150 hours a month (five hours a day over 30 days). Assume an hour of standard definition video streaming (or broadcasting, in the analog world) consumes about one gigabyte. For one person, that represents consumption of perhaps 150 GB. Assume overall household consumption of 200 GB, and a data cost of $50 per month.

Bump quality levels up to high definition and one easily can double the bandwidth consumption, up to perhaps 300 GB.

That suggests a “cost” of about 33 cents per gigabyte to watch 150 hours of video ($50 divided by 150 GB), with retail prices in the dollars-per-gigabyte range.
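The video arithmetic above can be reproduced in a few lines. This is a minimal sketch that uses only the assumptions stated in the text (five viewing hours a day, a 30-day month, about 1 GB per hour for standard definition, roughly double that for high definition, and a $50 monthly data charge); the function name `cost_per_gb` is illustrative, not from the source.

```python
# Sketch of the video "cost per gigabyte" arithmetic, using the text's assumptions.

def cost_per_gb(monthly_charge_usd: float, gb_consumed: float) -> float:
    """Monthly charge divided by the gigabytes consumed in that month."""
    return monthly_charge_usd / gb_consumed

HOURS_PER_DAY = 5        # assumed daily viewing time
DAYS_PER_MONTH = 30      # assumed month length
SD_GB_PER_HOUR = 1.0     # assumed standard-definition consumption
HD_GB_PER_HOUR = 2.0     # "easily can double" for high definition
MONTHLY_CHARGE = 50.0    # assumed monthly data charge, USD

viewing_hours = HOURS_PER_DAY * DAYS_PER_MONTH   # 150 hours
sd_gb = viewing_hours * SD_GB_PER_HOUR           # 150 GB
hd_gb = viewing_hours * HD_GB_PER_HOUR           # 300 GB

print(f"{viewing_hours} hours, SD: ${cost_per_gb(MONTHLY_CHARGE, sd_gb):.2f}/GB")
print(f"HD: ${cost_per_gb(MONTHLY_CHARGE, hd_gb):.2f}/GB")
```

The HD line simply applies the same formula to the 300 GB figure: doubling consumption at the same $50 halves the effective cost per gigabyte, to roughly 17 cents.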

Voice is something else. Assume a mobile or fixed line account represents about 350 minutes a month of usage. Assume the monthly recurring cost of having voice features on a mobile phone is about $20.

Assume a voice session consumes about 21 MB per hour. At 350 minutes (about 5.8 hours) a month, that is roughly 122 MB per month. At $20 a month, that implies revenue of about $164 per consumed gigabyte.
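The voice calculation follows the same pattern. This sketch uses the text's assumptions (350 minutes a month, about 21 MB per hour, a $20 monthly charge) and, as an assumption of its own, decimal units of 1,000 MB per GB, which is what the text's figures imply.

```python
# Sketch of the voice "revenue per gigabyte" arithmetic, using the text's assumptions.

MINUTES_PER_MONTH = 350      # assumed monthly voice usage
MB_PER_HOUR = 21             # assumed data consumed by an hour of voice
MONTHLY_VOICE_CHARGE = 20.0  # assumed monthly recurring charge for voice, USD
MB_PER_GB = 1000             # decimal units (an assumption)

hours = MINUTES_PER_MONTH / 60                             # about 5.8 hours
mb_per_month = MINUTES_PER_MONTH * MB_PER_HOUR / 60        # 122.5 MB
revenue_per_gb = MONTHLY_VOICE_CHARGE / (mb_per_month / MB_PER_GB)

print(f"{hours:.1f} h, {mb_per_month:.1f} MB, ${revenue_per_gb:.0f} per GB")
```

Using the unrounded 122.5 MB gives about $163 per GB; the text's $164 comes from rounding to 122 MB first. Either way, voice revenue per gigabyte is hundreds of times higher than the video figure.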

The point is that revenue per bit differs dramatically across the owned and third-party apps and services a network must support.

source: TechTarget

In fact, the disparity between text messaging and voice and 4K video is so vast it is hard to get them all on the same scale.

Sample Service and Application Bandwidth Comparisons

| Segment | Application or Service Name | Data volume (KB) |
|---|---|---|
| Consumer mobile | SMS | 0.13 |
| Consumer mobile | MMS with video | 100 |
| Business | IP telephony (1-hour call) | 28,800 |
| Residential | Social networking (1 hour) | 90,000 |
| Residential | Online music streaming (1 hour) | 72,000 |
| Consumer mobile | Video and TV (1 hour) | 120,000 |
| Residential | Online video streaming (1 hour) | 247,500 |
| Business | Web conferencing with webcam (1 hour) | 310,500 |
| Residential | HD TV programming (1 hour, MPEG 4) | 2,475,000 |
| Business | Room-based videoconferencing (1 hour, multi codec telepresence) | 5,850,000 |

source: Cisco
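The table's figures read most naturally as data volumes in kilobytes per message or per hour rather than as rates; that reading is an interpretation, not something the table states. Under that assumption (and 1 KB = 1,000 bytes), the sketch below converts a few hourly volumes copied from the table into average bit rates and compares the two extremes.

```python
# Sketch: convert hourly data volumes from the Cisco comparison table into
# average bit rates. ASSUMPTION: figures are kilobytes (1 KB = 1,000 bytes).

KB_PER_HOUR = {
    "IP telephony": 28_800,
    "Online music streaming": 72_000,
    "Social networking": 90_000,
    "Online video streaming": 247_500,
    "HD TV programming (MPEG 4)": 2_475_000,
    "Room-based videoconferencing": 5_850_000,
}

SECONDS_PER_HOUR = 3600
SMS_KB = 0.13  # one text message, from the table

def average_kbps(kb_per_hour: float) -> float:
    """Kilobytes per hour -> average kilobits per second."""
    return kb_per_hour * 8 / SECONDS_PER_HOUR

for name, kb in KB_PER_HOUR.items():
    print(f"{name:30s} {average_kbps(kb):>8,.0f} kbps")

# How many text messages carry as much data as one hour of telepresence.
print(f"Telepresence hour / SMS: {KB_PER_HOUR['Room-based videoconferencing'] / SMS_KB:,.0f}x")
```

Under this reading, the IP telephony row works out to 64 kbps, the rate of the G.711 voice codec, and HD TV to 5.5 Mbps, which is why the kilobyte interpretation seems right. One hour of telepresence carries roughly as much data as 45 million text messages.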

At a high level, as always, any business would prefer the ability to price according to usage. Retail access providers face the worst of all possible worlds: ever-growing usage and essentially fixed charges for that usage.

Unless variable usage charges return, to some extent, major market changes will keep happening. New products and services can help. But it will be hard for incrementally small new revenue streams to make a dent if one assumes that connectivity service providers continue to lose about half their legacy revenues every decade, as has been the pattern since deregulation began.
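The “half every decade” pattern can be restated as an annual rate. This is an illustrative sketch that assumes, for simplicity, a constant annual decline (real declines are lumpier) and uses an arbitrary starting revenue index of 100; `new_revenue_needed` is a hypothetical helper, not from the source.

```python
# Sketch: what "losing half of legacy revenue every decade" implies per year,
# and how much new revenue is needed just to stay flat. Illustrative only.

DECADE_RETENTION = 0.5   # half of legacy revenue survives each decade (from the text)
YEARS_PER_DECADE = 10

# Constant annual retention r such that r ** 10 == 0.5 (a smoothing assumption).
annual_retention = DECADE_RETENTION ** (1 / YEARS_PER_DECADE)
annual_decline = 1 - annual_retention

def new_revenue_needed(start_revenue: float, years: int) -> float:
    """New-service revenue required after `years` to hold total revenue flat,
    assuming legacy revenue decays at the constant annual rate above."""
    legacy_left = start_revenue * annual_retention ** years
    return start_revenue - legacy_left

print(f"Implied annual decline: {annual_decline:.1%}")
for y in (1, 5, 10):
    print(f"Year {y:2d}: new revenue needed = {new_revenue_needed(100.0, y):.1f} per 100 of starting revenue")
```

Halving every decade is roughly a 6.7 percent annual decline. Just to stand still, new products must replace about 29 percent of starting revenue within five years and 50 percent within ten.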

Consolidation of service providers is already happening. A shift of ownership of digital infrastructure assets is already happening. Stresses on the business model already are happening.

Will we eventually see a return to some form of regulated communications model? And even if that is desired, how would the model be adjusted to account for ever-higher capex? Subsidies always have been important. Will that role grow?

And how might business models adjust to accommodate more regulation or different subsidies? Delinking “usage” from the “ability to charge for usage” makes answering those questions inevitable at some point.

How many businesses or industries could survive 40-percent annual increases in demand and two-percent annual increases in revenue?
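Compounding makes the closing question concrete. This sketch takes the 40 percent demand growth and 2 percent revenue growth from the text. The 1-, 5- and 10-year horizons are arbitrary choices, and the function name `growth_multiple` is illustrative.

```python
# Sketch: compound 40% annual demand growth against 2% annual revenue growth.
# Both rates come from the text; the horizons printed are arbitrary choices.

DEMAND_GROWTH = 0.40
REVENUE_GROWTH = 0.02

def growth_multiple(rate: float, years: int) -> float:
    """Compound growth multiple after `years` at a constant annual `rate`."""
    return (1 + rate) ** years

for years in (1, 5, 10):
    demand = growth_multiple(DEMAND_GROWTH, years)
    revenue = growth_multiple(REVENUE_GROWTH, years)
    # Revenue per unit of demand, relative to today (today = 100%).
    print(f"Year {years:2d}: demand x{demand:5.1f}, revenue x{revenue:4.2f}, "
          f"revenue per unit of demand {revenue / demand:.1%} of today")
```

After ten years, demand is roughly 29 times today's level while revenue is only about 22 percent higher, so revenue per unit of demand falls to roughly 4 percent of its starting value.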