"The more things change, the more they stay the same" might well apply to the connectivity business, despite all efforts to rebrand and reposition the industry as having a value proposition based on something more than "we connect you."

Consider the notion that 6G mobile networks will be about “experience,” as suggested by Interdigital and analysts at Omdia. At some level, this is the sort of advice and thinking we see all the time in business, where suppliers emphasize “solutions” rather than products and virtually all suppliers seek to position themselves as providers of higher-order value.

The whole point of home broadband or smartphones with fast internet access is that those capabilities support the user experience of applications.

And many have been talking about that concept for a while. “The end of communications services as we know them” is the way analysts at the IBM Institute for Business Value talk about 5G and edge computing, for example.

To be sure, connectivity is not the only business where practitioners are advised to focus on end user benefits, solutions or experiences. But connectivity is among the businesses where perceived value, though always said to be “essential” to modern life, is also challenged by robust competition and the ability to create product substitutes.

One of the realities of the internet era is that although end user data consumption keeps climbing, monetization of that usage by communications service providers is problematic. Higher usage might lead to incremental revenue growth, but at a rate far lower than the rate of consumption growth.

That is the opposite of the relationship between consumption and revenue in the voice era, when linear consumption growth automatically entailed linear revenue growth. Though there was some flat-rate charging, most of the revenue was generated by usage of long-distance calling services.

“On the surface, exponential increases in scale would seem like a good thing for CSPs, but only if pricing keeps pace with the rate of expansion,” the institute says. “History and data suggest it will not.”
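The gap between usage growth and revenue growth can be illustrated with a little compounding arithmetic. The sketch below is only an illustration: the function name `revenue_per_unit` and all growth rates are hypothetical assumptions chosen to show the shape of the problem, not measured industry data. It indexes revenue per unit of usage to 1.0 in year zero and tracks how that index moves when usage compounds faster than revenue.

```python
def revenue_per_unit(usage_growth, revenue_growth, years):
    """Return revenue per unit of usage for each year, indexed to 1.0 in year 0.

    Both growth rates are annual fractions (0.30 means 30 percent a year).
    """
    series = []
    for year in range(years + 1):
        usage = (1 + usage_growth) ** year      # usage index, year 0 = 1.0
        revenue = (1 + revenue_growth) ** year  # revenue index, year 0 = 1.0
        series.append(revenue / usage)
    return series


# Hypothetical rates, for illustration only (not taken from the article or any dataset).
# "Internet era": usage compounds much faster than revenue.
internet_era = revenue_per_unit(usage_growth=0.30, revenue_growth=0.03, years=5)
# "Voice era": revenue grows in step with usage.
voice_era = revenue_per_unit(usage_growth=0.05, revenue_growth=0.05, years=5)

for year, (net, voice) in enumerate(zip(internet_era, voice_era)):
    print(f"year {year}: internet-era index {net:.2f}, voice-era index {voice:.2f}")
```

Under these assumed rates the voice-era index stays flat, because revenue tracks usage, while the internet-era index falls every year. That is the pattern the institute describes when pricing does not keep pace with expansion.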

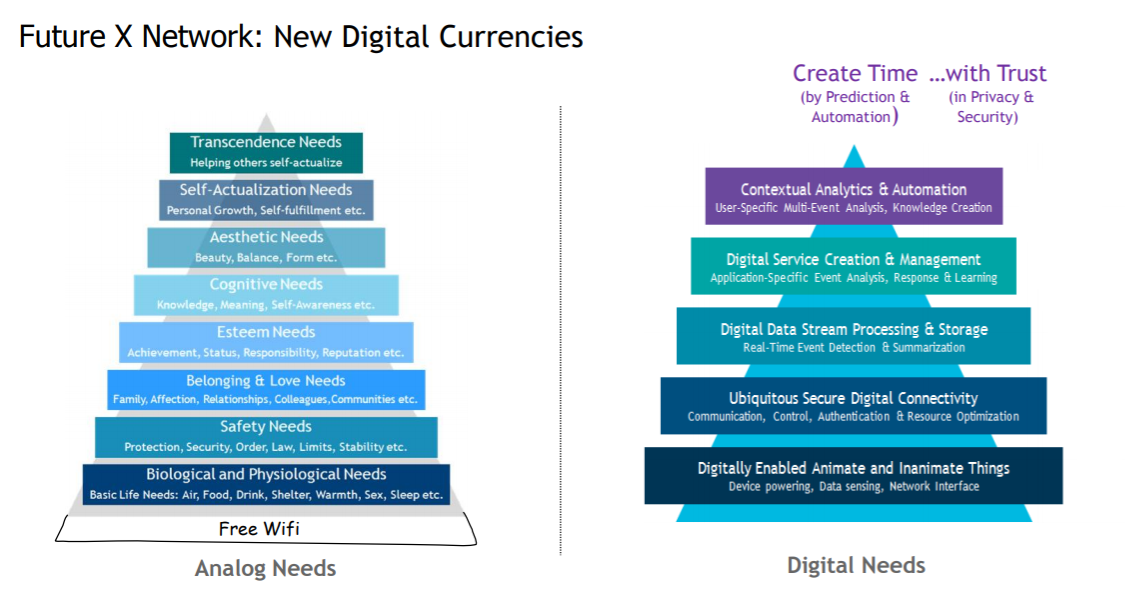

Indeed, Nokia Bell Labs researchers have been saying for some time that "creating time" is one way of illustrating the difference between core value propositions in the legacy market and today’s. “We connect you” has been the traditional value prop. But that could shift to something else as “connectivity” continues to face commoditization pressures.

source: Nokia Bell Labs

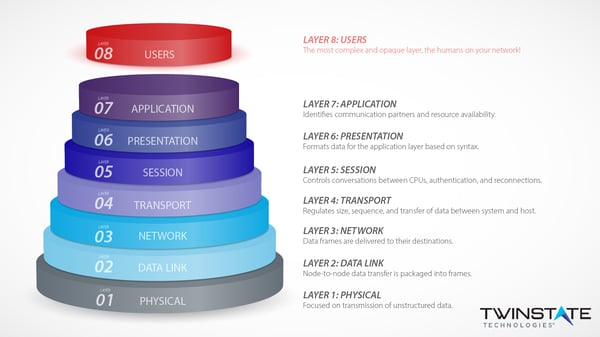

The growing business model issue in the internet era is that conventional illustrations of the computing stack refer only to the seven or so layers that pertain to the “computing” or “software” functions. Human beings have experiences at some level above the “applications” layer of the software stack, and business models reside above that.

Likewise, physical layer communication networks and devices make up a layer zero that underpins the use of software that requires internet or IP network access. The typical illustration of how software is created using layers only pertains to software as a product.

In other words, software has to run on appliances or machines, and products are assembled or created using software, at additional layers above those shown in the seven-layer OSI model or the TCP/IP model.

So we might describe physical infrastructure and connectivity services as a “layer zero” that supports software layers one to seven. And software itself supports products, services and revenue models above layer seven of the software stack.

Some humorously refer to “layer eight” as the human factors that shape the usefulness of software, for example. Obviously, whole business operating models can be envisioned using eight to 10 layers as well.

source: Twinstate

The point is that the OSI software stack only applies to the architecture for creating software. It does not claim to show the additional ways disaggregated and layered concepts apply to firms and industries.
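One way to picture the extended stack is as a simple ordered list, with connectivity below the seven OSI layers and experience and business layers above them. The following is a rough sketch under stated assumptions: the `Layer` class, the `domain` labels, and the names given to layers zero, eight and nine are hypothetical choices made for illustration. Only the seven OSI layer names are standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    number: int
    name: str
    domain: str  # hypothetical label: "connectivity", "software" or "business"


# The seven standard OSI layer names, bottom to top.
OSI_NAMES = [
    "Physical", "Data Link", "Network", "Transport",
    "Session", "Presentation", "Application",
]


def build_stack():
    """Build an illustrative 10-layer stack: layer zero, OSI layers 1-7, then two layers above."""
    stack = [Layer(0, "Physical infrastructure and connectivity services", "connectivity")]
    stack += [Layer(i, name, "software") for i, name in enumerate(OSI_NAMES, start=1)]
    # Layers above seven are assumptions for illustration, not part of the OSI model.
    stack.append(Layer(8, "Human factors and experience", "business"))
    stack.append(Layer(9, "Products, services and revenue models", "business"))
    return stack


def layers_above(stack, number):
    """Return every layer that sits above the given layer number."""
    return [layer for layer in stack if layer.number > number]


stack = build_stack()
for layer in reversed(stack):  # print top-down, the way stack diagrams are usually drawn
    print(f"layer {layer.number}: {layer.name} ({layer.domain})")
```

Calling `layers_above(stack, 7)` returns only the experience and business layers, which is where the argument here places most perceived value, while layer zero sits at the bottom of everything else.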

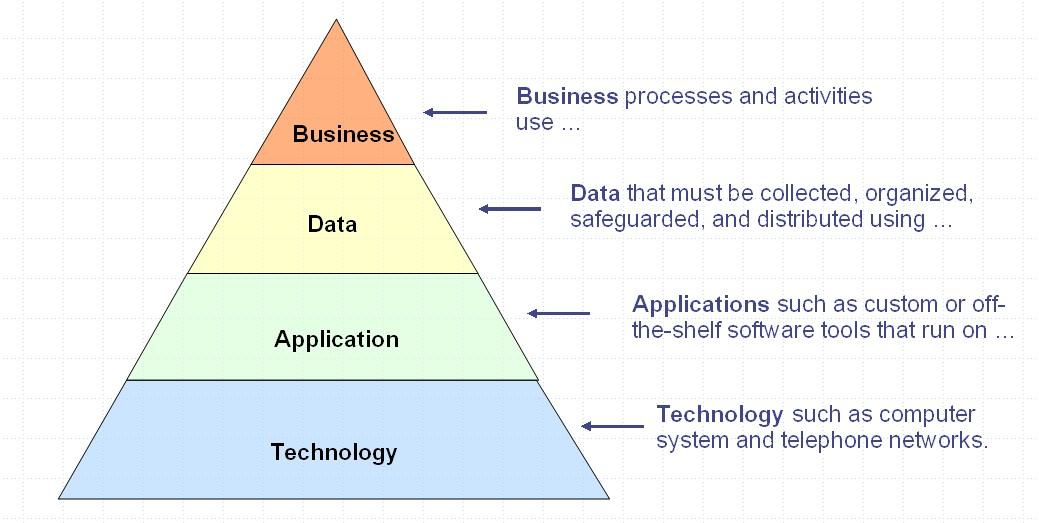

source: Dragon1

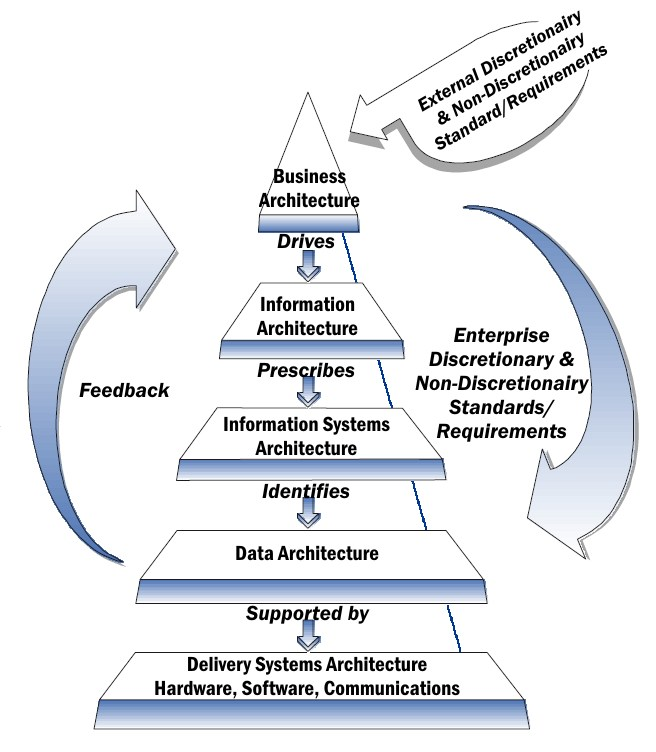

Many have been using this general framework for many decades, in the sense of business outcomes driving information and computing architecture, platforms, hardware, software and communications requirements.

source: Wikipedia

In a nutshell, the connectivity industry’s core problem in the internet era is the relative commoditization of connectivity, compared to the perceived value created at other layers.

Layer zero is bedeviled by nearly-free or very-low-cost core services that have developed over the last several decades as both competition and the internet have come to dominate the business context.

Note that the Bell Labs illustration based the software stack on the use of “free Wi-Fi.” To be sure, Wi-Fi is not actually free. And internet connectivity still is required. But you get the idea: the whole stack (software and business) rests, in part, on connectivity that has become quite inexpensive, on an absolute basis or in terms of cost per bit.

Hence the language shift from “we connect you” to other values. That might include productivity or experience, possibly shifting beyond sight and sound to other dimensions such as taste and touch. The point is that the industry will be searching for better ways to position its value beyond “we connect you.”

And all that speaks to the relative perception of the value of “connections.” As foundational and essential as that might be, “mere” connectivity is not viewed as an attractive value proposition going forward.

It remains to be seen how effective such efforts will be. The other argument is that, to be viewed as supplying more value, firms must actually become suppliers of products and solutions with higher perceived value.

And that tends to mean getting into other parts of the value chain recognized to supply such value. If applications generally are deemed to drive higher financial valuations, for example, then firms have to migrate into those areas, if higher valuations are desired.

If applications are viewed as scaling faster, and producing more new revenue than connectivity, and if suppliers want to be such businesses, then ways should be sought to create more ownership of such assets.

The core problem, as some might present it, is that the “experience” benefits are going to be supplied by the apps themselves, not the network transporting the bits. It is fine to suggest that value propositions extend beyond “connectivity.”

source: Interdigital, Omdia

The recurring problem is that, in a world where layers exist, where functions are disaggregated, connectivity providers are hard pressed to become the suppliers of app and business layer value. So long as connectivity exists, value and experience drivers will reside at higher layers of the business stack.

Unless connectivity providers become asset owners at the higher levels, they will not be able to produce the sensory and experience value that produces business benefits. Without such ownership, the value proposition remains what it has always been: “we connect you.”

If so, the rebranding will fail. Repositioning within the value chain, even if difficult, is required, if different outcomes are to be produced.

So long as humans view the primary communications industry value as "connections," all rebranding focusing on higher-order value will fail. It is the apps that will be given credit for supplying all sorts of new value.